Symptoms

It takes a very long time to load a data warehouse on Oracle database with Kettle when the language in the Windows Region and Language settings is different from English (United States).

Cause

- Oracle JDBC driver used by Kettle issues command ALTER SESSION SET NLS_LANGUAGE and changes default database language.

- Changing NLS_LANGUAGE also changes oracle parameter NLS_SORT from BINARY to the specified language.

- When NLS_COMP is equal to ANSI and the value of NLS_SORT is not BINARY, the linguistic comparison is used for WHERE statements.

- When the value of NLS_COMP is different from BINARY a full scan is used for VARCHAR2 fields instead of index range scan.

Resolution

Add “-Duser.country=en -Duser.language=en” to java runtime options in Kettle batch files.

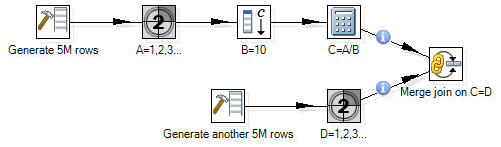

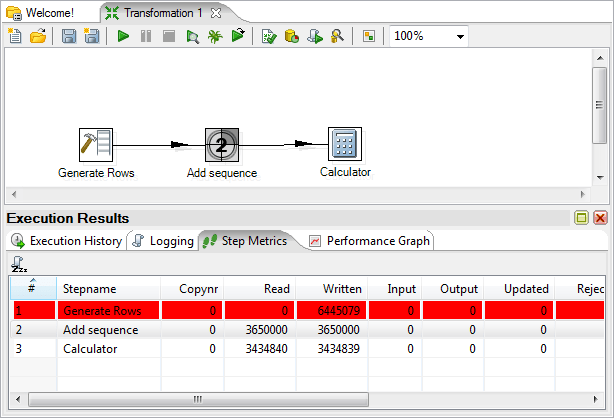

Experiment

When NLS_SORT is BINARY, the index is used.

SELECT PARAMETER, VALUE FROM V$NLS_PARAMETERS

WHERE PARAMETER IN ('NLS_LANGUAGE', 'NLS_SORT', 'NLS_COMP');

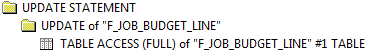

UPDATE F_JOB_BUDGET_LINE SET ACTUAL_COST=0

WHERE INSTANCE_KEY='JobBudgetLine00000000000004835665';

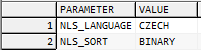

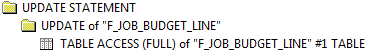

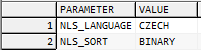

When the parameter NLS_LANGUAGE is changed, NLS_SORT is also changed. The index is not used when NLS_SORT=CZECH and NLS_COMP=ANSI.

ALTER SESSION SET NLS_LANGUAGE='CZECH';

SELECT PARAMETER, VALUE FROM V$NLS_PARAMETERS

WHERE PARAMETER IN ('NLS_LANGUAGE', 'NLS_SORT');

UPDATE F_JOB_BUDGET_LINE SET ACTUAL_COST=0

WHERE INSTANCE_KEY='JobBudgetLine00000000000004835665';

We can change NLS_SORT to BINARY to make the index used again. Alternatively we can set NLS_COMP to BINARY, this will have the same effect.

ALTER SESSION SET NLS_SORT='BINARY';

SELECT PARAMETER, VALUE FROM V$NLS_PARAMETERS

WHERE PARAMETER IN ('NLS_LANGUAGE', 'NLS_SORT');

UPDATE F_JOB_BUDGET_LINE SET ACTUAL_COST=0

WHERE INSTANCE_KEY='JobBudgetLine00000000000004835665';